Data Centric AI vs Model Centric AI

The structure of modern applied AI stands on the bricks of data. For good AI/ML practice, a solid data practice is key. This has led to a major movement called Data Centric AI, championed by AI pioneers such as Prof. Andrew Ng. According to Ng (paraphrasing), you gain more by enhancing your data (in quantity and quality) than by trying to build or improve the model. This has held true across industry. For instance, when asked how Google Search did so well, Peter Norvig, Google's Director of Research, said, “We don’t have better algorithms. We have more data.” That makes sense, since data is often called the new oil. However, that oil needs to be processed by an engine. Hence, there is another camp, led by Dr. Judea Pearl, that professes ‘mind over data.’ This brings us to Model Centric AI, where the focus is on the model.

Data Centric AI has typically taken precedence in industry, whereas academia is more model centric. This makes sense, since industry is driven more by results than by ingenuity. However, the boundaries are not hard, and there are counterexamples in both directions: pioneers like Andrew Ng have taken Data Centric AI into research labs, while industry has produced powerful models like transformers.

But Data Centric AI, or machine learning in practice, suffers from one major drawback: a lack of generalization. ML practitioners often fret over data drift (a change in the distribution of the inputs) and concept drift (a change in the relationship between the inputs and the target). The real world is constantly changing, so the data it generates, and the patterns within that data, change too. This change is called drift. To learn more about drift, read this.
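
One common way to watch for data drift in a single numeric feature is to compare its distribution at training time with a recent production batch. Below is a minimal sketch, assuming a two-sample Kolmogorov-Smirnov (KS) statistic as the drift measure, synthetic Gaussian data, and a hypothetical alert threshold of 0.1; none of these choices come from a specific monitoring tool. It only covers data drift: detecting concept drift needs labels, because it is a change in how the target relates to the inputs.

```python
import bisect
import random

def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic: the largest vertical gap
    between the empirical CDFs of the two samples (0 = identical, 1 = disjoint)."""
    ref, cur = sorted(reference), sorted(current)
    gap = 0.0
    # Both empirical CDFs only jump at sample points, so checking every
    # sample point is enough to find the maximum gap.
    for v in ref + cur:
        cdf_ref = bisect.bisect_right(ref, v) / len(ref)
        cdf_cur = bisect.bisect_right(cur, v) / len(cur)
        gap = max(gap, abs(cdf_ref - cdf_cur))
    return gap

rng = random.Random(42)
train = [rng.gauss(0.0, 1.0) for _ in range(2000)]    # feature at training time
same = [rng.gauss(0.0, 1.0) for _ in range(2000)]     # production batch, no drift
shifted = [rng.gauss(0.5, 1.0) for _ in range(2000)]  # production batch, mean drifted

THRESHOLD = 0.1  # hypothetical alert threshold, tune per feature in practice

for name, batch in [("no drift", same), ("mean shift", shifted)]:
    d = ks_statistic(train, batch)
    status = "DRIFT" if d > THRESHOLD else "ok"
    print(f"{name:10s} KS={d:.3f} {status}")
```

For the shifted batch the statistic should land around 0.2 (the gap between two unit-variance Gaussians whose means differ by 0.5), well above the threshold, while the unshifted batch stays close to 0.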

Ladder of Causation

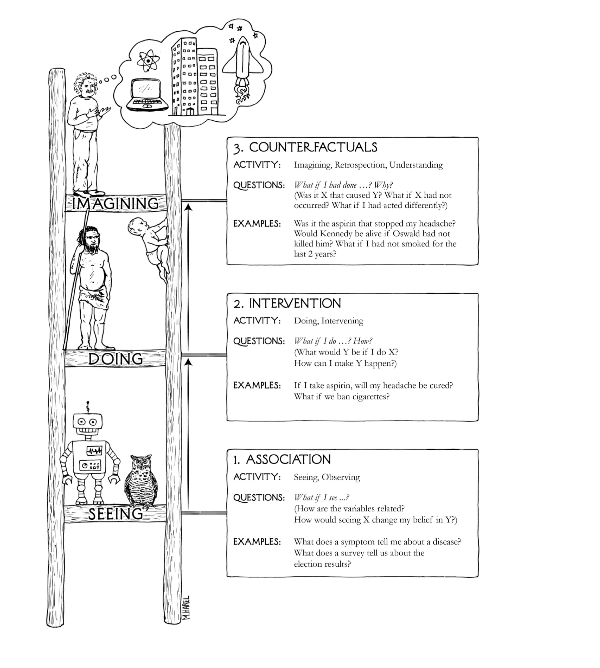

But why does drift bother ML practice? Because most ML algorithms are associational: they primarily learn associations, also called correlations, from data. In reality, however, intelligence is not only about finding associations in observations. It is also about experimentation and imagination, which Pearl calls intervention and counterfactuals. The image above, from Dr. Judea Pearl’s The Book of Why, illustrates the Ladder of Causation.
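
To make the gap between the first two rungs concrete, here is a minimal simulation of a hypothetical toy structural causal model; the variables and probabilities are made up for illustration. A hidden factor Z drives both X and Y, while X has no causal effect on Y at all. "Seeing" (rung 1) estimates P(Y | X=1) - P(Y | X=0) from observational data; "doing" (rung 2) sets X by intervention, written do(X), and compares the outcomes.

```python
import random

def sample(n, do_x=None, seed=0):
    """Draw n (x, y) pairs from a toy SCM: Z -> X and Z -> Y, with no X -> Y edge.
    If do_x is given, X is forced to that value, cutting the Z -> X arrow."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z = rng.random() < 0.5                       # hidden confounder
        if do_x is None:
            x = rng.random() < (0.9 if z else 0.1)   # Z strongly drives X
        else:
            x = do_x                                 # intervention do(X = do_x)
        y = rng.random() < (0.8 if z else 0.2)       # Y depends only on Z
        rows.append((x, y))
    return rows

def p_y_given_x(rows, x_val):
    """Empirical P(Y = 1 | X = x_val)."""
    ys = [y for x, y in rows if x == x_val]
    return sum(ys) / len(ys)

# Rung 1: association, estimated from passive observation.
obs = sample(100_000)
seeing = p_y_given_x(obs, True) - p_y_given_x(obs, False)

# Rung 2: intervention, estimated by setting X ourselves in two experiments.
treated = sample(100_000, do_x=True, seed=1)
control = sample(100_000, do_x=False, seed=2)
doing = p_y_given_x(treated, True) - p_y_given_x(control, False)

print(f"Rung 1 (seeing): {seeing:.2f}")
print(f"Rung 2 (doing):  {doing:.2f}")
```

Working the model out by hand, the observational gap is 0.74 - 0.26 = 0.48, while the interventional gap is 0.5 - 0.5 = 0, so the simulation should print values close to those. A purely associational learner trained on `obs` would treat X as a strong predictor of Y, yet changing X in the world would do nothing to Y.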

A look at the self-explanatory image shows that ML models (including deep learning) are still stuck on the first rung of the ladder. In that respect they are no better than animals, even though many modern algorithms achieve human-level performance on specific tasks. When faced with an out-of-distribution scenario, however, these models fail, since all they have learned are associations.

Human intelligence, by contrast, has evolved over millions of years through doing (experimenting) and imagining. AI algorithms cannot do this. This is precisely why ML models do not adapt well to out-of-distribution scenarios, and it makes the case for causality in modern AI.
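
The failure mode described above can be reproduced with a deliberately tiny, hypothetical example, assuming a "model" that simply predicts the label from whichever single binary feature agrees with it most often in training. The `core` feature has a stable relationship with the label (75% agreement everywhere), while the `spurious` feature agrees 95% of the time in training but only 5% of the time after deployment. This is a sketch of an associational learner under a shift in correlations, not a model of any particular real system.

```python
import random

def make_env(n, spurious_agreement, seed):
    """Hypothetical environment: rows of (core, spurious, label).
    core matches the label 75% of the time in every environment;
    spurious matches it at an environment-specific rate."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        y = rng.random() < 0.5
        core = y if rng.random() < 0.75 else not y
        spurious = y if rng.random() < spurious_agreement else not y
        rows.append((core, spurious, y))
    return rows

def accuracy(rows, feature_idx):
    """Accuracy of the rule 'predict label = feature value'."""
    return sum(r[feature_idx] == r[2] for r in rows) / len(rows)

train = make_env(10_000, spurious_agreement=0.95, seed=0)
deploy = make_env(10_000, spurious_agreement=0.05, seed=1)  # correlation flips

# An associational learner keeps whichever feature correlates best in training.
names = ("core", "spurious")
best = max((0, 1), key=lambda i: accuracy(train, i))

print("chosen feature: ", names[best])
print(f"train accuracy:  {accuracy(train, best):.2f}")
print(f"deploy accuracy: {accuracy(deploy, best):.2f}")
print(f"core feature in deployment: {accuracy(deploy, 0):.2f}")
```

The learner picks `spurious` because it wins on the training data (about 0.95 versus 0.75), and its accuracy collapses to roughly 0.05 once the correlation flips. The `core` feature, whose relationship to the label does not change between environments, keeps roughly the same 0.75 accuracy in deployment.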

Conclusion

We leave you with this to reflect on, and with a case for putting more emphasis on mind over data (information). Causality is an active area of research, and its tools and techniques have not yet matured. Stay tuned to our blog for more on this topic.

Remember: correlation does not imply causation. However, some correlations do reflect causation.